FanView – Fans’ Broadcasting Solution

Overview

The 21st century is on the cusp of a technology revolution that is connecting people around the world. With the internet becoming increasingly democratized (to the extent that access may soon be free; please visit www.oternet.is), mankind is set to unleash the potential of these technologies. Today’s consumers demand more, and the challenge for innovators is to deliver more for less.

The sports and entertainment industry has been well placed to receive most of the innovations in electronics, communication and media. Growing live video consumption drives consumers to view video wherever they are – in their homes, on the desktop, or on the go. But the technology needed to provide delivery to multiple screens over IP networks – including Flash players on personal computers, iPhones and other mobile devices, and televisions – presents significant challenges for content owners and service providers alike. The issues are many and complex, including different transport networks and protocols, a multitude of streaming servers specific to each player and device, varying bandwidths, and more.

The challenges of multiple screen multi-angle view delivery

Producers and service providers are eager to meet the growing demand for anywhere, anytime, “view all”, any screen access to video content. Unfortunately, there is no consistent video streaming protocol standard across the heterogeneity of desktop clients, mobile devices, and TV screens on the market today. Video content producers must now encode and deliver video separately for each destination screen. Consider the following three scenarios:

Scenario 1:

A video producer is streaming the latest space shuttle launch. The producer would like to enable viewers with desktop computers, iPhones, or TVs to see the video, but the streams destined for each audience must be encoded separately in formats compatible with each screen. Constrained by the significant time and expense of performing multiple encodes for delivery to the three different screens, the producer limits the availability of the content to a small subset of the potential audience.

Scenario 2:

A video producer is streaming a live event – perhaps a political gathering, a film festival, or a sporting event. But due to the event’s remote location, bandwidth is extremely limited, making it impossible to send more than one stream to its destination. The producer must choose just one format and screen (desktop computer, mobile device, or TV) for the content.

Scenario 3:

A video service provider has signed on to stream all of the presentations given at a company’s annual meeting. During the event, the company’s marketing team asks if the streams can also be delivered to the employees’ iPhones and other smartphones. Unfortunately, the service provider does not have the necessary encoders to stream to the iPhone, and misses a lucrative opportunity to provide additional services to its key client.

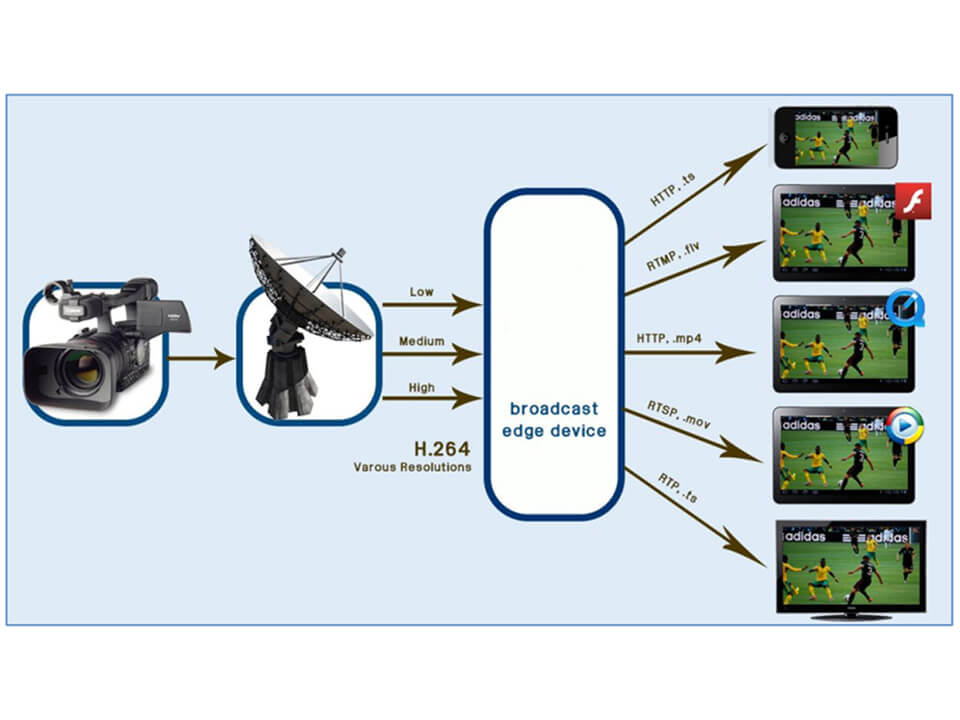

Separate encoders and networks required for each destination

While most devices and screens are converging on a common video encoding format (H.264), they differ in how that video is transported from the encoder through the distribution server/network to the screen (iPhone, computer player, TV, etc.).

Currently, formatting the same live event for delivery to multiple screens and formats requires a separate encoder and transport protocol for each destination:

TVs

MPEG-2 transport streams with very specific formatting are delivered over IP to the set-top box.

Current workflows for multi-screen delivery are complex, inefficient, and expensive for both video producers and service providers, and will not scale with the exploding demand for video content. As a result, difficult choices have to be made affecting the scope of distribution of the live video, severely limiting potential revenue and visibility for the video content. Clearly, a new model that can scale up and simplify the encoding and delivery workflow to multiple screens – without overwhelming production and network resources as well as budgets – is required.

iPhones / Android phones

The encoder delivers an MPEG-2 transport stream over IP containing H.264 video to the server. The server (or, in some setups, the encoder itself) segments the stream into a series of short files (typically around 10 seconds each). These files are delivered via HTTP along with an M3U8 playlist that tells the iPhone which files to play.
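The segment-and-playlist delivery described above can be illustrated with a minimal sketch. It assumes the playlist format is the M3U8 media playlist used by HTTP Live Streaming; the function name `build_media_playlist` and the segment file names are hypothetical and are not part of the FanView platform.

```python
import math


def build_media_playlist(segment_uris, segment_durations, first_sequence=0, ended=False):
    """Return the text of a minimal HLS media playlist (.m3u8).

    segment_uris and segment_durations are parallel lists; durations are in seconds.
    """
    if not segment_uris:
        raise ValueError("at least one segment is required")
    if len(segment_uris) != len(segment_durations):
        raise ValueError("each segment needs exactly one duration")

    # The target duration is an integer upper bound on every segment duration.
    target = max(math.ceil(d) for d in segment_durations)

    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # version 3 allows fractional EXTINF durations
        f"#EXT-X-TARGETDURATION:{target}",
        f"#EXT-X-MEDIA-SEQUENCE:{first_sequence}",
    ]
    for uri, duration in zip(segment_uris, segment_durations):
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(uri)
    if ended:
        # Only present once the event is over; a live playlist omits it.
        lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_media_playlist(["seg7.ts", "seg8.ts"], [10.0, 9.5], first_sequence=7))
```

For a live event the server would rewrite this playlist as new segments arrive, advancing the media sequence number as old segments drop off the front.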

Web Delivery

Flash players: A Flash media server delivery network “reflects” encoder streams over a proprietary streaming protocol (RTMP) connection to the viewers’ desktop Flash player.

Silverlight players: The encoder delivers “fragmented” MPEG-4 files containing H.264 video to the HTTP server (typically the Microsoft IIS server). The server delivers a special “manifest” file to the Silverlight player that tells it which MP4 files to play.

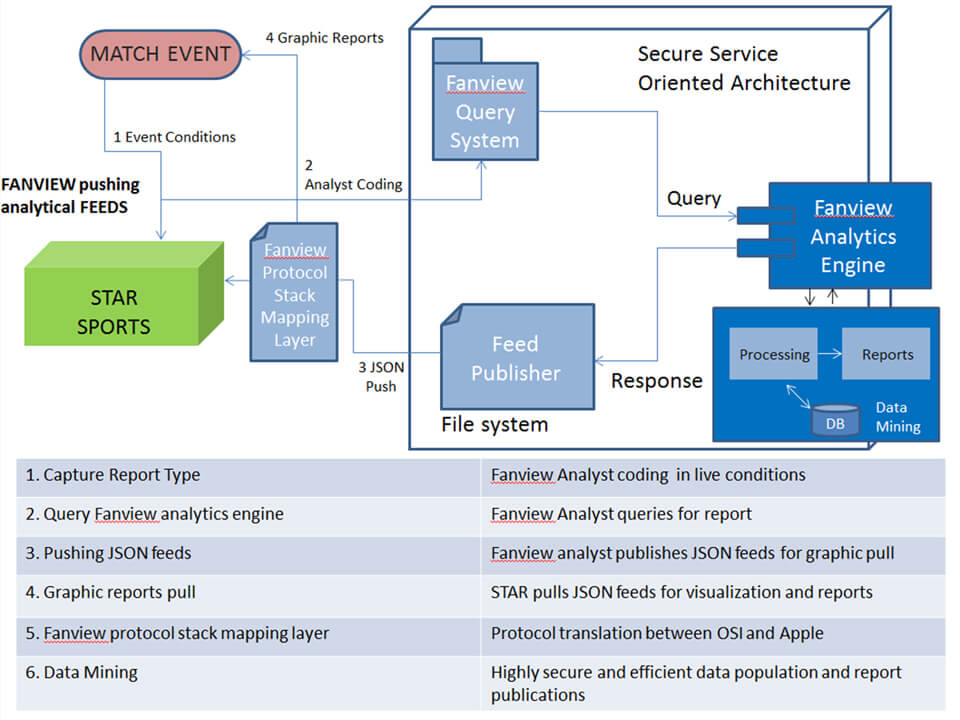

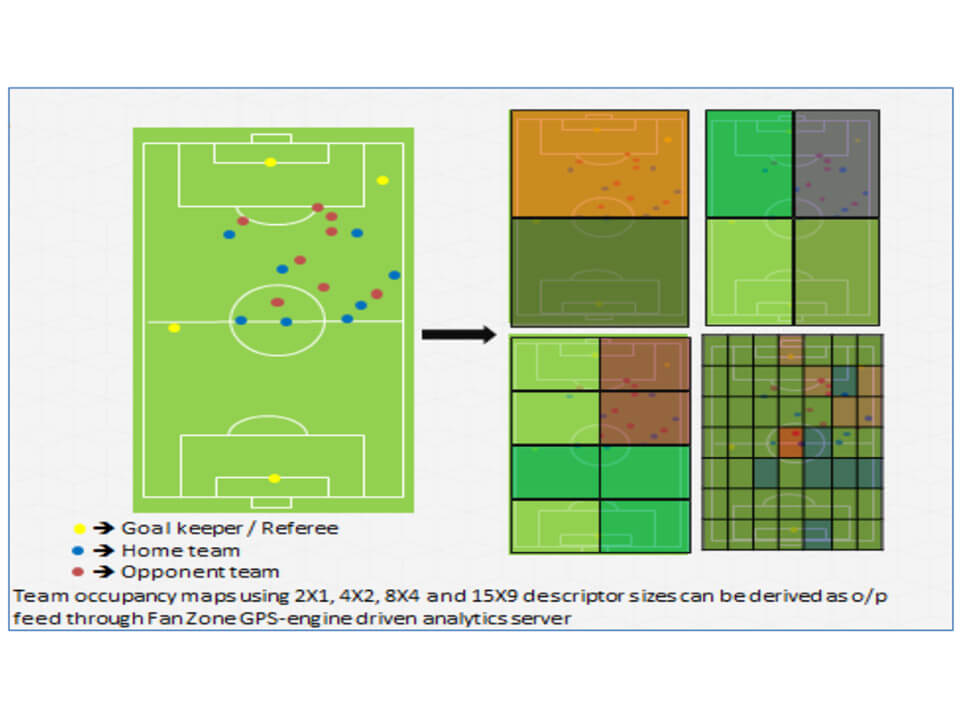

Broadcasting end venue soccer tracking architecture

Shown below is the method for high-precision tracking and automatic camera selection across multiple synchronized cameras. The system also shows how video stitching can be used to create a seamless stream that follows a player. The block diagram below gives an insight into the venue relay architecture and how the different components interact to relay a 360° view of the venue to the viewers. A live soccer match is enhanced with instant replays, coded in MPEG-4 format, including 3D graphics. The broadcaster creates the clips, publishes them on a server on the Internet, and notifies the viewers about their availability through the trigger link paradigm. The viewer can replay the clips by downloading them over the Internet. The clips are prepared and encoded in MPEG-4 format. The receiver contains an MPEG-4 (3D) player. In this way the viewer can interact with the replay, ultimately choosing different viewing angles.

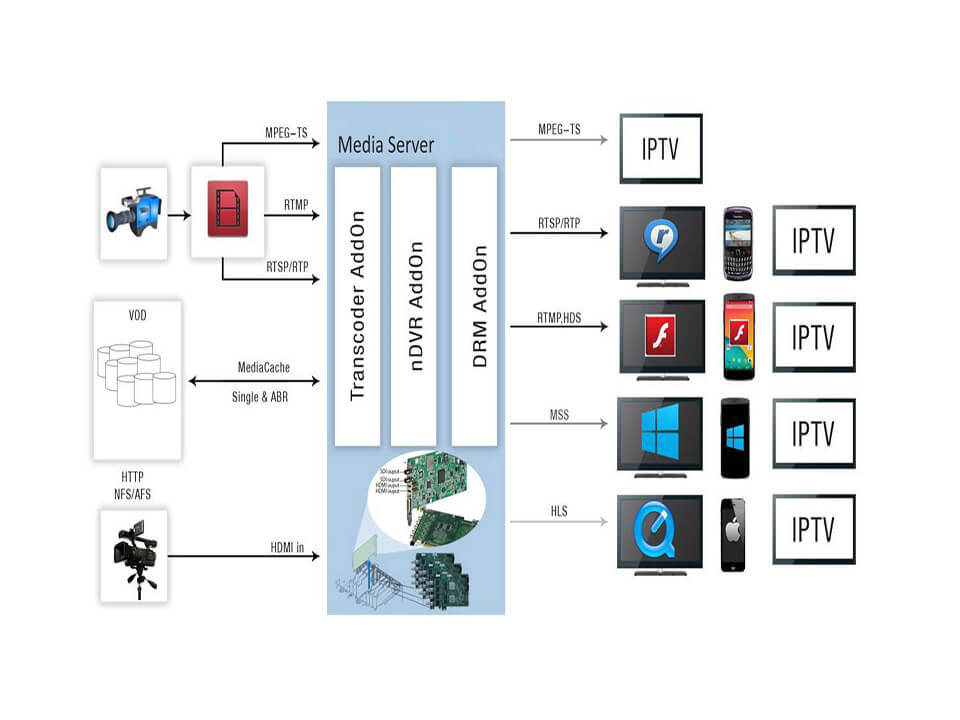

The New Solution for Multi-Screen Multi-angle Delivery

Now there is an easy and affordable new solution that eliminates the need for separate encoders and networks for delivering live video to multiple screens. This multiprotocol platform provides simultaneous media streaming to iPhone, iPod touch, Flash, Silverlight and QuickTime web players, and IPTV set-top boxes. It makes it possible to stream live to multiple destinations from a single platform, without special pre-processing of source content.

For a fraction of the cost of traditional broadcast hardware, our platform will be designed to work just like a video switcher, controlling real-time switching between multiple live video cameras while dynamically mixing in other source media. Features such as chroma key, 3D graphics and built-in titles merge seamlessly with the layering system, enabling broadcasters who want to stream to multiple screens to easily create live and on-demand broadcasts.

Different screens require different resolutions (bitrate and size) of the stream in order to optimize picture quality. Our platform can simultaneously produce several different resolutions and stream them live to the streaming server. The platform will then combine these streams and deliver the appropriate resolution, over the required network, in the right format, to each screen, all in one single workflow.
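As an illustration of delivering "the appropriate resolution" to each screen, the sketch below picks one rendition from a ladder of simultaneously produced streams. The selection rule (highest bitrate that fits both the screen height and a share of the measured bandwidth), the `Rendition` type, the `headroom` parameter and the example ladder are all assumptions made for illustration, not a description of the platform's actual logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    width: int
    height: int
    bitrate_kbps: int


def pick_rendition(renditions, screen_height, bandwidth_kbps, headroom=0.8):
    """Pick the highest-bitrate rendition that fits the screen and the link.

    headroom is the fraction of measured bandwidth we are willing to use.
    """
    if not renditions:
        raise ValueError("rendition ladder is empty")
    budget = bandwidth_kbps * headroom
    fitting = [
        r for r in renditions
        if r.height <= screen_height and r.bitrate_kbps <= budget
    ]
    if fitting:
        return max(fitting, key=lambda r: r.bitrate_kbps)
    # Nothing fits: fall back to the cheapest rendition rather than failing.
    return min(renditions, key=lambda r: r.bitrate_kbps)


if __name__ == "__main__":
    ladder = [
        Rendition("mobile", 640, 360, 800),
        Rendition("tablet", 1280, 720, 2500),
        Rendition("tv", 1920, 1080, 6000),
    ]
    print(pick_rendition(ladder, screen_height=1080, bandwidth_kbps=4000).name)
```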

Unique features of our platform

The platform can stream to any screen with a single content encode. H.264 live streams can be ingested from conventional off-the-shelf RTMP, RTSP/RTP, or MPEG-TS encoders. Video on demand (VOD) files can be in any of the common container formats (e.g., .f4v, .mp4, .m4a, .mov, .mp4v, .3gp, and .3g2). The platform then performs all necessary protocol conversion and content adaptation for each destination client.

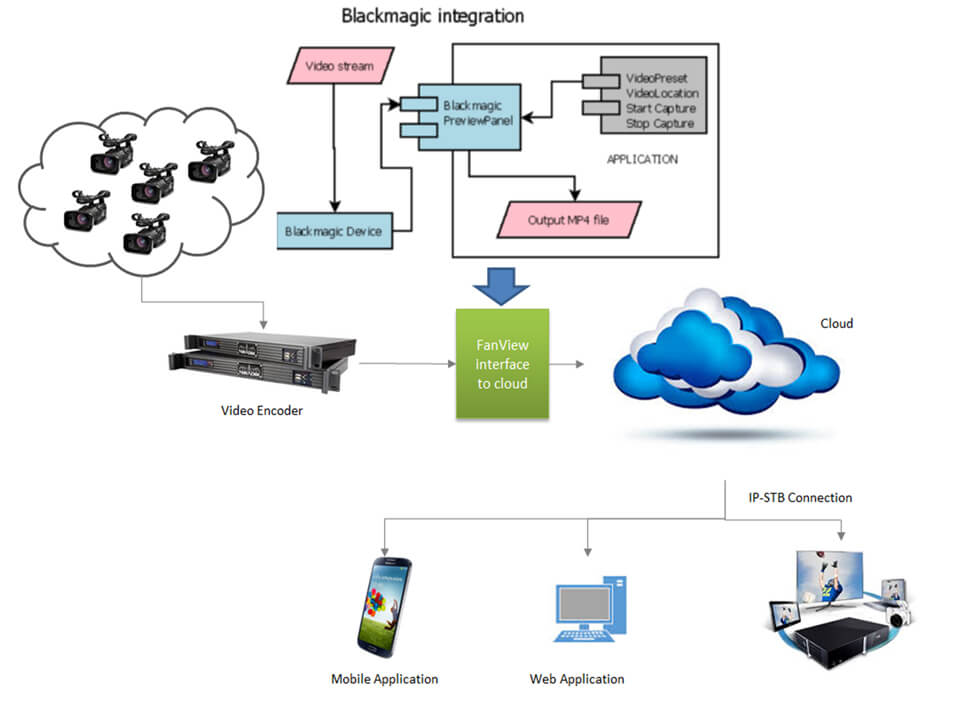

FANVIEW PLATFORM

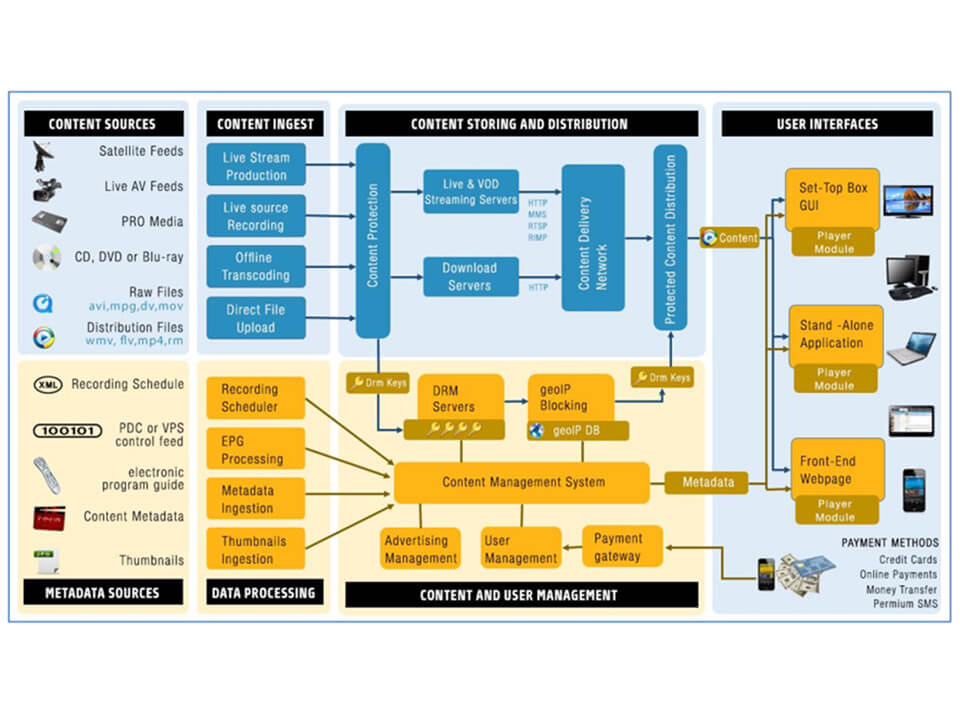

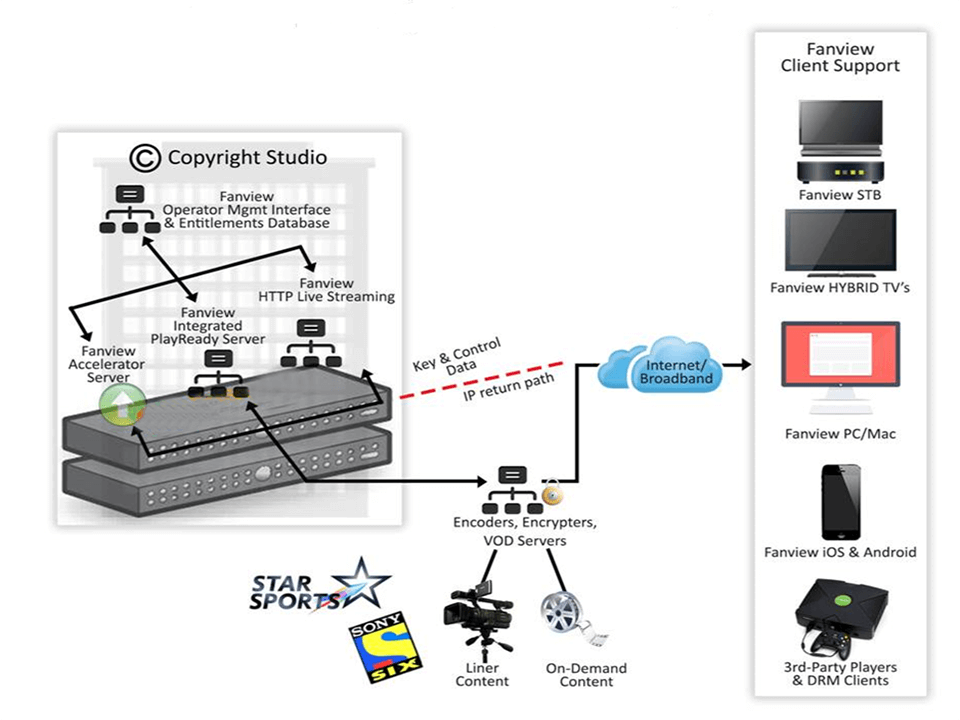

FanView’s proposed platform is an integrated content and delivery platform, developed to help broadcasters and content owners enter the challenging world of multiscreen media. It provides complete control over how brands and content are managed and monetized, taking viewer engagement to a whole new level.

- Superior viewing on any screen or device.

- Comprehensive Video on Demand services.

- Mobile apps & social interaction.

- Secure distribution – via the cloud or CDN.

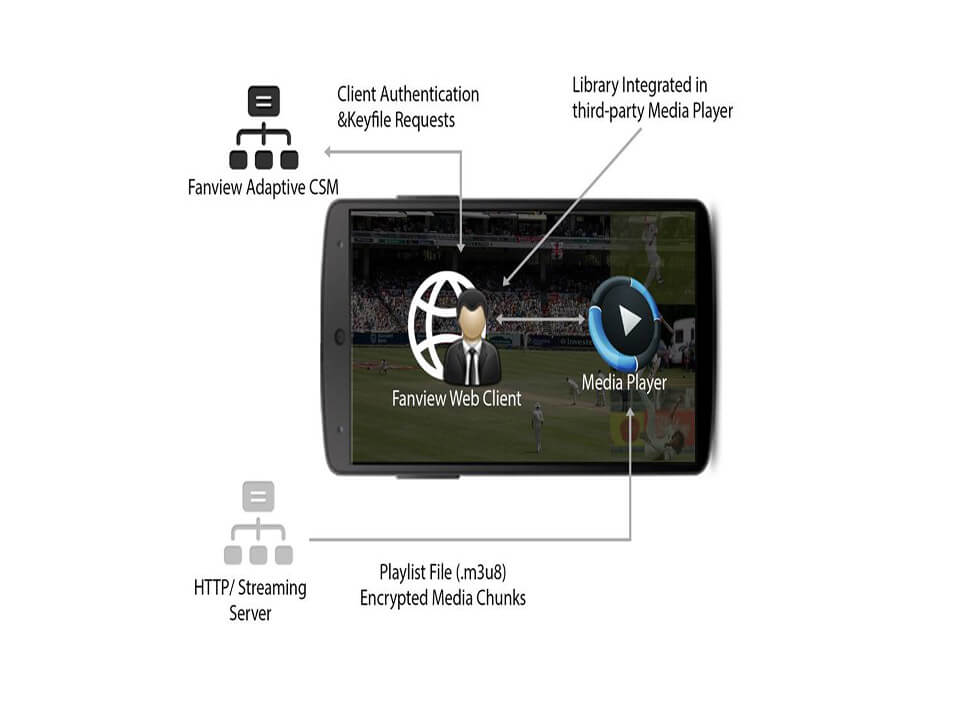

The FanView platform will provide extended control over content delivery and user-experience management. It enables clients to effectively ingest, manage, monetize and distribute content to set-top boxes and Smart TVs, over the web and to any mobile device. Robust and secure, the FanView platform connects to cloud services and global Content Delivery Networks (CDNs).

Features of FanView Platform

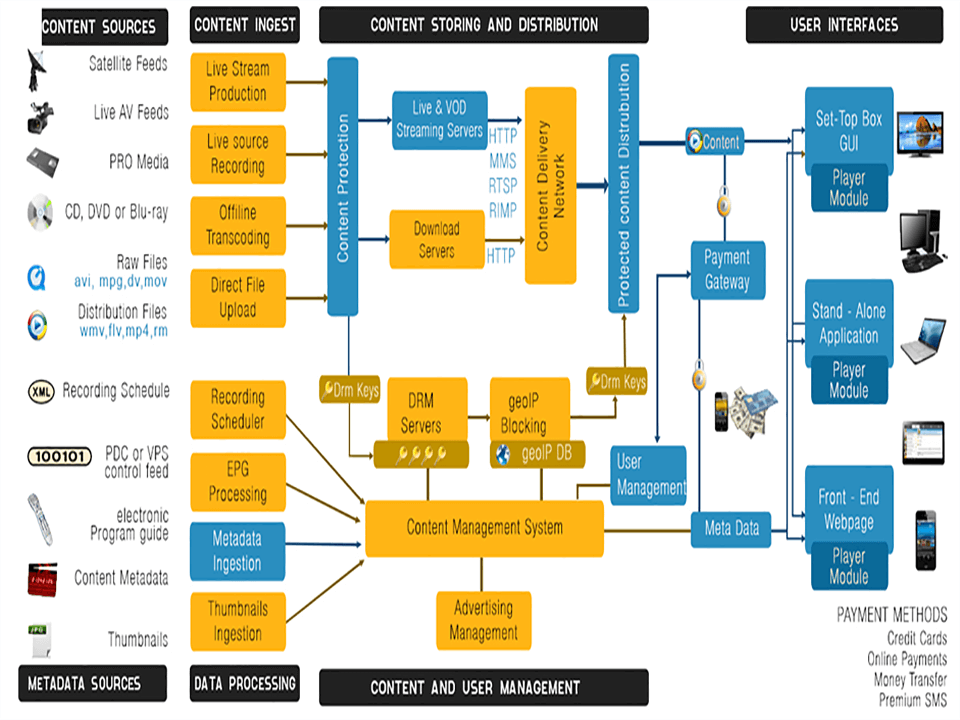

Work Flow and Ingest

- Seamless integration to legacy TV broadcast workflows

- Open architecture to interface with third party systems

- Live ingest from satellite, terrestrial, cable TV, camera live feeds

- Non-linear ingest with complete multimedia files processing and protection

- Hosting, technical supervision and service, technical support (1st or 2nd line)

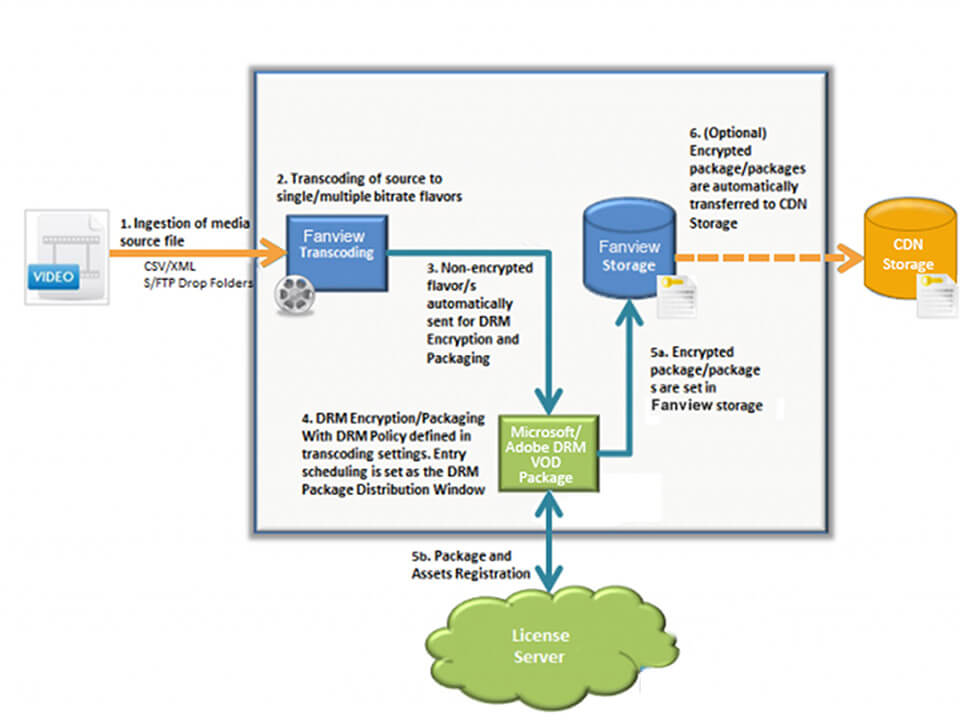

Content and user management

- Management of sport and file-based assets, including subtitles and image thumbnails

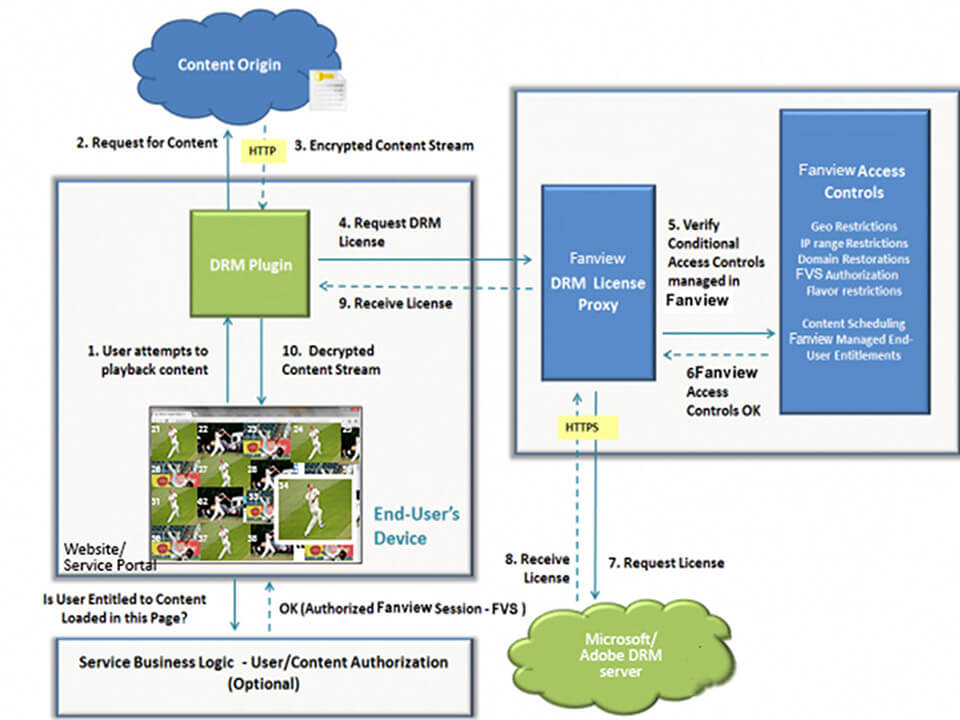

- DRM managing license keys to secure content

- Microsoft PlayReady to enable sports rental with time-based licenses – Video Vault

- Content Management System (CMS), with product catalog, integrated into existing IS

- Customer Relationship Management (CRM)

Content storage and distribution

- Front-end web page presentation, following client brand guidelines

- Client Player for secure and insecure content

- Client applications for extended out-of-browser access to library media

- Mobile streaming to any device, including iOS, Android and Windows

- Delivery to RTMP (Adobe Flash), RTSP, HTTP (Silverlight and Apple), MMS

- Integration into global CDNs and cloud-based services or deployment of Visual Unity’s CDN platform, providing sufficient server capacity and automatic load balancing, with secure storage of content and data reporting, outside the Cloud.

- Conditional access, with URL protection and GeoIP visitor geo-location

Business and Revenue management

- Integrated Payment Gateway

- Balance Management – keeping information of the balances on accounts of virtual users

- Database transactions – detailed information about all payment transactions within the system

- Billing – accounting module linked to the client’s billing or IS system

E.g. use case: revenue potential for a soccer match

| Scenario | Revenue Potential | Revenue Stream |

| Football | · Place bets prior to and during the game · Watch webcasts of post-match interviews for a fee · Build user communities – provide match commentary off-line, vote for best player/goal, etc. · Charge users to read premium content (hot and cold statistics; 3D graphics replays; different viewpoints) · Multi-cam, multi-angle views · Sell merchandise on-line and off-line, e.g. football kit, books · Charge subscription fees for the web site | · Betting revenue · Webcast revenue · Retail revenue · Subscription revenue · Pay-per-view (of video clips) · Shared revenue (from co-creation) |

End viewers visualize 360° of the video broadcast

After capturing the end-to-end broadcast video, our proposed FanView platform stitches, streams and displays the video capsules to provide an immersive panoramic experience for viewers on STBs, tablets, smartphones and virtual reality headsets.

To produce a 360° video broadcast a scene is shot with multiple cameras that capture all possible viewing angles. These various views are then stitched together into a single high resolution video sphere that is encoded and streamed using existing standards. On the receiving end, viewers will be able to choose and dynamically modify their field of view by either moving the display or touching the screen.
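To make the field-of-view selection concrete, the sketch below maps a viewing direction to a pixel position in the stitched frame. It assumes the video sphere is stored as an equirectangular frame, which is a common layout but is not stated in this document; the function name and the angle conventions are likewise illustrative.

```python
def direction_to_pixel(yaw_deg, pitch_deg, width, height):
    """Map a viewing direction to (x, y) in an equirectangular frame.

    Assumed conventions: yaw 0 is the horizontal centre of the frame and grows
    to the right; pitch +90 is the top row and pitch -90 is the bottom row.
    """
    # Wrap yaw into [-180, 180) so that the panorama is continuous.
    yaw = ((yaw_deg + 180.0) % 360.0) - 180.0
    # Clamp pitch, since there is nothing above the zenith or below the nadir.
    pitch = max(-90.0, min(90.0, pitch_deg))

    x = (yaw / 360.0 + 0.5) * width
    y = (0.5 - pitch / 180.0) * height
    return int(x) % width, min(int(y), height - 1)


if __name__ == "__main__":
    # Looking straight ahead lands in the middle of a 3840x1920 frame.
    print(direction_to_pixel(0, 0, 3840, 1920))
```

A player application would call such a mapping for the centre of the current view whenever the user moves the display or drags on the screen, then render the region around that point.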

FanView’s STB-TV platform demographic breakdown and interactivity attractiveness

| User Group | FanView enabled platform Soccer viewing prediction |

| Children | Element of fun – football Animation, competition |

| Teenagers | Entertainment (football), novelty – 3D camera angles |

| 25-34 Year-olds | Need to be entertained and to socialize |

| Over 40’s | Meaningful interaction: replays and stats for more information, betting |

Use-Case 1

The broadcaster can offer multi-cam/multi-angle viewing by broadcasting different camera views live over multiple sub-channels on a pay-per-use model. Not only does the broadcaster have control over the multiple view angles, but the viewer can also access any of the viewing angles of interest. Viewers can use their remote control to zoom or pan across the entire soccer field to track a favorite player. The bird’s eye view of the playing field is captured by multiple fixed-position, high-resolution (at least 4K x 2K) cameras spanning the complete soccer field. Each sub-channel view is captured by multiple Super HDTV cameras positioned at different angles. Zoomed or panned views requested by a given viewer are extracted from the images taken by the appropriate camera, and the multiple sub-channels are decoded simultaneously within the receiver. The client device specifies the desired resolution of the streaming channel.
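The extraction of a zoomed or panned view from one camera image can be sketched as a crop-window calculation. This is a simplified illustration for a single frame: the function name, the normalized pan coordinates and the clamping behaviour at the frame edges are assumptions, and selecting the appropriate camera is left out.

```python
def crop_window(frame_w, frame_h, centre_x, centre_y, zoom):
    """Return (left, top, width, height) of the requested view inside one frame.

    centre_x and centre_y are the pan position as fractions of the frame (0..1);
    zoom is the magnification, where 1.0 means the full frame.
    """
    if zoom < 1.0:
        raise ValueError("zoom must be at least 1.0")

    w = round(frame_w / zoom)
    h = round(frame_h / zoom)
    left = round(centre_x * frame_w - w / 2)
    top = round(centre_y * frame_h - h / 2)

    # Keep the window inside the frame when the viewer pans to an edge.
    left = max(0, min(left, frame_w - w))
    top = max(0, min(top, frame_h - h))
    return left, top, w, h


if __name__ == "__main__":
    # 2x zoom on the centre of a 4096x2048 bird's eye frame.
    print(crop_window(4096, 2048, 0.5, 0.5, 2.0))
```

The cropped region would then be scaled to the resolution the client device requested.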

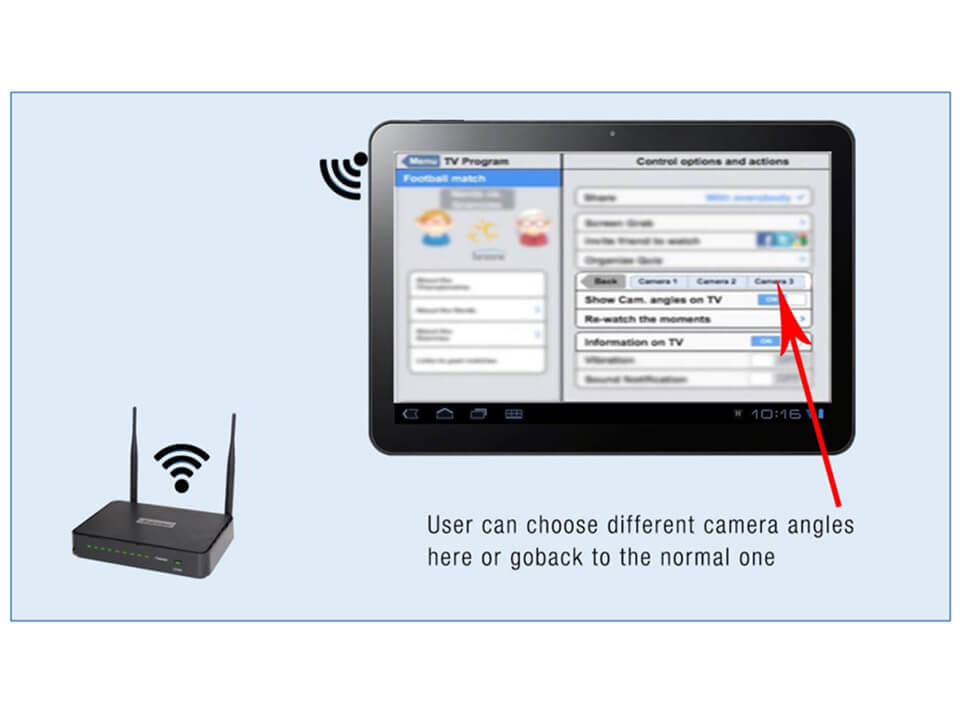

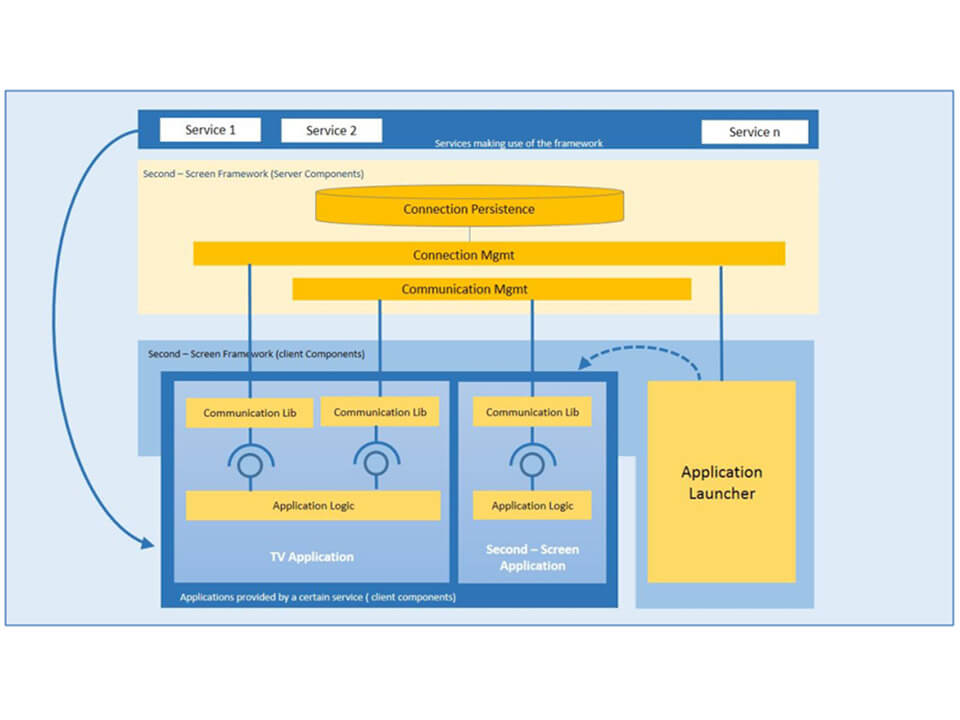

Second screen framework components

Shown below are the components of the second screen framework. The framework components can be distinguished between those running on the server side and those running on the client side. The interfaces between the framework and the client application are highlighted.

Connection management server

The Connection Mgmt component at the server handles device discovery and device connection. It generates random but unique identifiers and generates the QR-code to be displayed on the TV device for the discovery process. It ensures that the second-screen device that scans the QR-code and loads the encoded URL is associated with the TV device. It makes sure that pairs of device IDs are persisted in the Connection Persistence, so that connected devices remain associable and the discovery process needs to be executed only once. Furthermore, the Connection Mgmt server component provides the Connection Manager and the Application Launcher web applications.
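The discovery and pairing flow described above might look roughly like the following sketch. The class and method names, the one-time pairing token, the URL layout and the in-memory dictionaries (standing in for the Connection Persistence) are hypothetical. The sketch stops at producing the URL that a QR-code would encode; rendering the QR image itself is not shown.

```python
import secrets


class ConnectionManager:
    """In-memory stand-in for the server-side pairing logic."""

    def __init__(self, base_url):
        self._base_url = base_url.rstrip("/")
        self._pending = {}  # one-time pairing token -> TV device id
        self._pairs = {}    # second-screen device id -> TV device id

    def register_tv(self):
        """Create an id for a TV and the URL its QR-code should encode."""
        tv_id = secrets.token_urlsafe(16)
        token = secrets.token_urlsafe(16)
        self._pending[token] = tv_id
        return tv_id, f"{self._base_url}/pair?token={token}"

    def redeem(self, token):
        """Called when a second-screen device loads the scanned URL."""
        tv_id = self._pending.pop(token, None)
        if tv_id is None:
            raise KeyError("unknown or already used pairing token")
        second_screen_id = secrets.token_urlsafe(16)
        # Persisting the pair means discovery only has to happen once.
        self._pairs[second_screen_id] = tv_id
        return second_screen_id

    def tv_for(self, second_screen_id):
        """Look up the TV paired with a second-screen device, if any."""
        return self._pairs.get(second_screen_id)


if __name__ == "__main__":
    manager = ConnectionManager("https://example.invalid/ssf")
    tv_id, qr_url = manager.register_tv()
    token = qr_url.split("token=", 1)[1]
    phone_id = manager.redeem(token)
    print(manager.tv_for(phone_id) == tv_id)
```

Using identifiers from a cryptographic random source makes them both unpredictable and, for practical purposes, unique, which matches the "random but unique" requirement.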

Connection Manager (client)

The Connection Manager client component is a TV-compliant web application running in the browser of the TV device. It handles the user interaction with the Framework server components and provides dialogues for multiple purposes, e.g. showing the QR-code, disconnecting devices and displaying help texts. The Connection Manager application is launched when the web application using the Second-Screen Framework makes the appropriate API call. It is typically launched when users want to connect a new second-screen device to their TV device.

Connection Lib (client)

The Connection Lib client component is a JavaScript library that provides wrapper classes to facilitate communication with the Connection Mgmt server component. It provides API calls to launch the Connection Manager application in the browser of the TV device and to request the connection status.

Application Launcher (client)

The Application Launcher component is responsible for the automatic launch of applications on the second screen. It receives URLs of applications that are to be launched on the second-screen device. The Application Launcher itself should be running after the QR-code has been scanned and whenever an application is to be launched. When it receives a URL, it automatically requests the browser to load the appropriate resource.

Communication Mgmt (server)

The Communication Mgmt server component is responsible for the exchange of messages between connected devices. Clients send messages intended for connected devices to the Communication Mgmt server component, and clients register at the server for messages from connected devices. Whenever a message is available for a registered device, the server delivers it to that device.
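A minimal in-process sketch of this register-and-deliver pattern is shown below. The class name, the callback-based delivery and the decision to queue messages for devices that have not registered yet are assumptions for illustration; a real deployment would deliver over a network transport rather than through direct function calls.

```python
from collections import defaultdict


class CommunicationServer:
    """Relays messages between paired devices, identified by device id."""

    def __init__(self):
        self._handlers = {}               # device id -> delivery callback
        self._queued = defaultdict(list)  # device id -> undelivered messages

    def register(self, device_id, handler):
        """Register a device; any messages already waiting are delivered at once."""
        self._handlers[device_id] = handler
        for message in self._queued.pop(device_id, []):
            handler(message)

    def send(self, to_device_id, message):
        """Deliver a message now, or hold it until the recipient registers."""
        handler = self._handlers.get(to_device_id)
        if handler is None:
            self._queued[to_device_id].append(message)
        else:
            handler(message)


if __name__ == "__main__":
    server = CommunicationServer()
    inbox = []
    server.send("tv-1", {"action": "show-replay"})  # TV not registered yet
    server.register("tv-1", inbox.append)           # queued message arrives now
    server.send("tv-1", {"action": "change-camera", "camera": 3})
    print(inbox)
```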

Communication Lib (client)

The Communication Lib client component is a JavaScript library that provides wrapper classes to facilitate communication with the Communication Mgmt server component. It provides API calls to register for messages from connected devices and to send messages to connected devices.

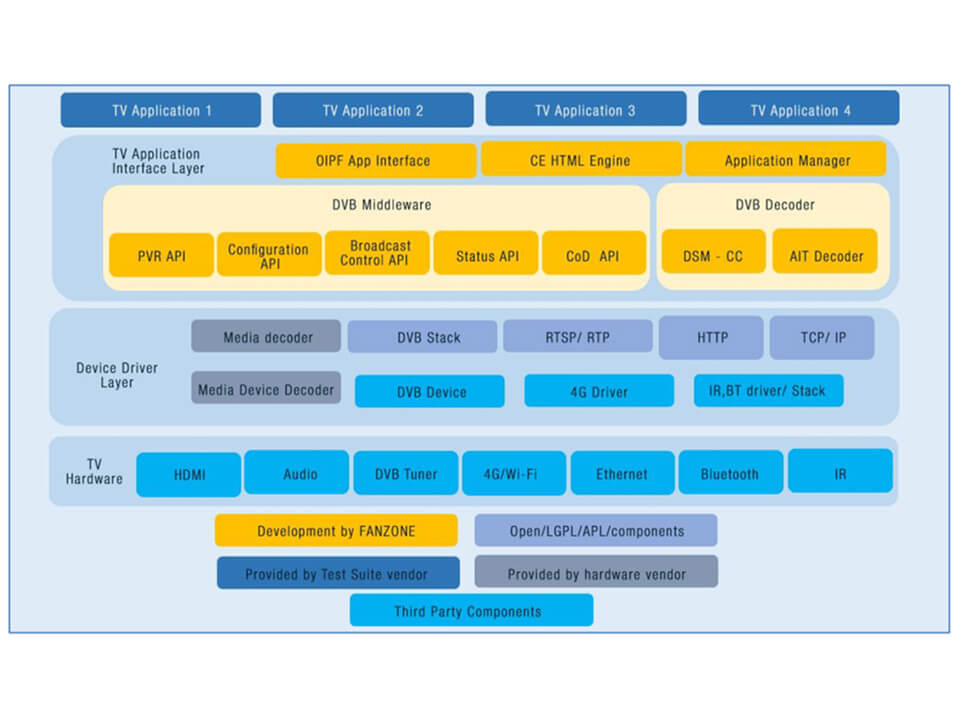

On the STB side our offerings

Our offerings will be ready for deployment on suitable hardware and are built on multiple open standards, such as the Open IPTV Forum (OIPF) specifications, Consumer Electronics HTML (CE-HTML), World Wide Web Consortium (W3C) standards, and Digital Video Broadcasting (DVB).

The components delivered as part of the solution are:

- OIPF extensions (the application programming interfaces, or APIs, defined for TV applications) and a CE-HTML engine

- Multimedia interface to play DVB and Internet media

- Middleware APIs for PVR, Configuration and Setting

- Broadcast control, Status, Content on Demand functionality

- DSM-CC and AIT modules to decode the broadcast stream

The protocol stack is designed and developed by FanView. Its handshake takes place once the pay-per-service has been activated, and a protocol firmware upgrade is applied on the STB upon feature/service activation. Shown below are the protocol stack components that will be developed by FanView.

For broadcasters

The solution ensures better control over deployed STBs/TVs through faster upgrades to the latest firmware. It enables different usage patterns and business models through interactive TV apps. Because it is an optimized solution with a small footprint, it makes deployment on legacy and low-end set-top box hardware possible. Its built-in error reporting mechanism allows remote troubleshooting, which adds to the ease of use for support personnel.

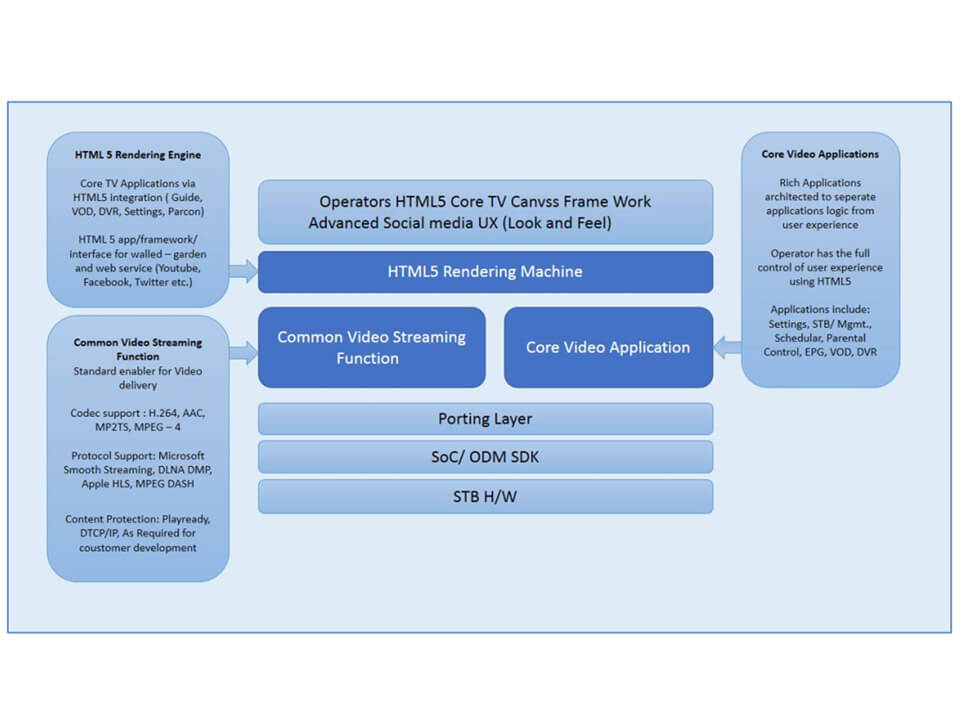

New STB Client Architecture

By leveraging the new STB client architecture based on HTML5, advanced SoCs and web standard technologies, TV operators can achieve the following benefits:

- Respond more quickly to consumers and competitors with rapid service and application innovation through tight coupling between the STB hardware vendor and quicker integration between TV apps.

- Faster and less expensive introduction of new STB hardware and associated features through lower costs and faster software porting.

- High-impact user experiences based on industry-standard web approaches, coupled with the ability to render rich graphics.

The tables below showcase the capabilities of the planned traditional, transitional and high-capacity STB models.

Shown below is the ideal STB architecture. The client software will take full advantage of SoC and STB software functions and provide a porting layer to facilitate fast and low-cost porting between platforms. The client layer must include the following elements.

The first element is an HTML5-based UI rendering engine, which is needed to achieve several key objectives: it provides maximum UI flexibility and consistency with other IP devices. The second element is a set of common enabling functions that support the core video applications, including STB management, scheduling and scheduling-conflict management, parental controls, EPG, DVR and VOD; it should also provide trigger handling for advertisements and merchandising. The third element is a highly optimized architecture that separates application logic from the user experience. The fourth element is a porting layer that minimizes the time and cost of porting between STBs and SoCs by abstracting the hardware-specific items from the application layer.

For Digital TV and set top box

- Facilitates faster deployment of TV-capable Set top boxes and TVs by virtue of being a preconfigured modular solution.

- The interactive capability of a set-top box is a function of its network connectivity; the DVB broadcast system can use a return channel between the set-top box and the broadcaster or service provider to deliver data.

- Enhances customer experience with its low boot times and quick response times

- Allows quick deployment and troubleshooting as a result of on-the-fly updates

- Allows integration with personal media

TR-135 (Data Model Extensions designed for specific devices with flexibility for adding new devices and combining services) implementation enables manageability from remote auto-configuration servers.

FanView mockup preview panel player window

The mockup screen below shows how the FanView platform’s preview player window can follow and zoom in on particular players, and play back events from the game in panorama video and camera switching modes. Metadata enables sub-systems to interoperate and to be dynamically configured by users, and enables processes to be orchestrated. Several major types of metadata can be specified: about sports events, sports incidents, athletes, objects of interest, cameras, video frames and streams, user interface layouts and personal profiles. The Sport Information System creates and gathers metadata about sports incidents, which is synchronized to the video streams. This information will be gleaned from broadcasters as data feeds.

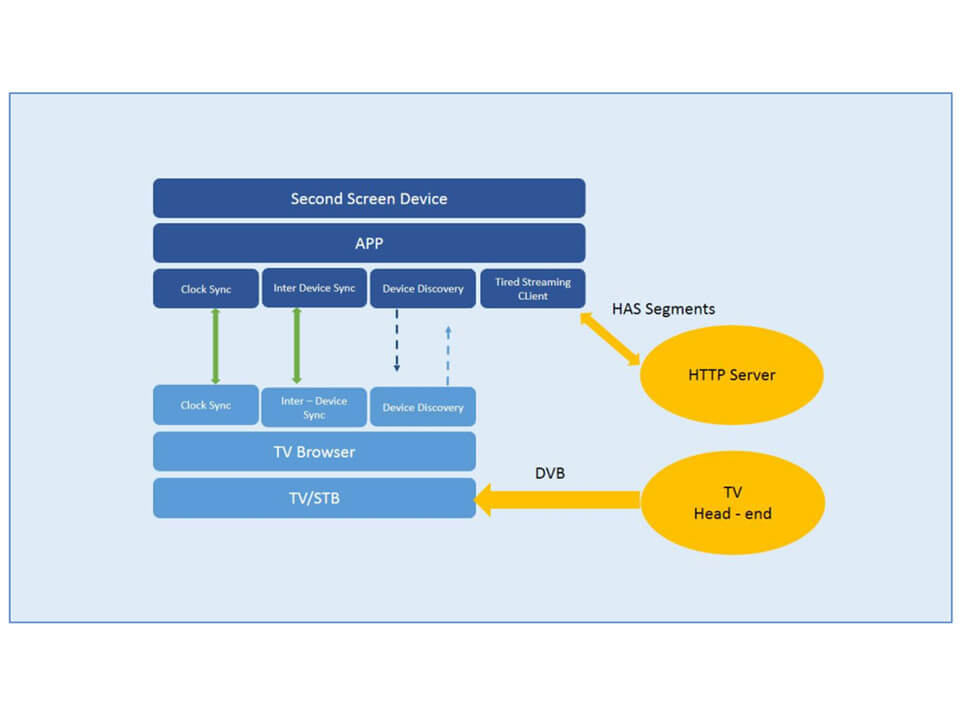

Second screen services synchronizing with main TV Screen

On the TV/STB side, there will be a TV application which features modules for clock synchronization, inter-device synchronization and device discovery. On the second-screen side, there will be a Tiled Streaming application, extended with modules that form the counterparts of those in the TV/STB. Whereas the main input for the TV/STB is the DVB stream, the second-screen application retrieves Tiled Streaming segments from a standard web server via HTTP. The platform will use a timestamp-based inter-device synchronization system that allows frame-accurate synchronization between DVB streams and over-the-top HTTP adaptive streaming content. As a showcase of this technology, we can implement a second-screen application that allows users to freely navigate (e.g. pan/tilt/zoom) around an ultra-high-resolution video panorama that is synchronized with the main DVB stream shown on the TV. Using this technology, users will have the freedom to navigate spatially through a football match without missing any of the action on the main TV screen.
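One way to picture timestamp-based synchronization between the two devices is sketched below. It assumes an NTP-style four-timestamp exchange to estimate the clock offset, and assumes the TV can report the wall-clock time at which media time zero was presented; both assumptions, and all names in the code, are illustrative rather than taken from the platform design.

```python
def clock_offset(t_request_sent, t_tv_received, t_tv_replied, t_reply_received):
    """Estimate (TV clock - second-screen clock) in seconds.

    The first and last timestamps are read on the second screen, the middle two
    on the TV. The estimate is exact when the network delay is symmetric.
    """
    return ((t_tv_received - t_request_sent) + (t_tv_replied - t_reply_received)) / 2.0


def segment_for_tv_position(local_now, offset, tv_wallclock_at_zero, segment_duration):
    """Return (segment index, seconds into that segment) the TV is showing now."""
    tv_now = local_now + offset
    media_time = tv_now - tv_wallclock_at_zero
    if media_time < 0:
        raise ValueError("the TV has not started presenting the stream yet")
    index = int(media_time // segment_duration)
    return index, media_time - index * segment_duration


if __name__ == "__main__":
    # Second screen is 5 s behind the TV; each network leg takes 0.1 s.
    offset = clock_offset(100.0, 105.1, 105.2, 100.3)
    print(offset)
    print(segment_for_tv_position(200.0, offset, 180.0, 2.0))
```

The second-screen player would fetch the returned segment over HTTP and seek to the reported position inside it, repeating the offset measurement periodically to correct for drift.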

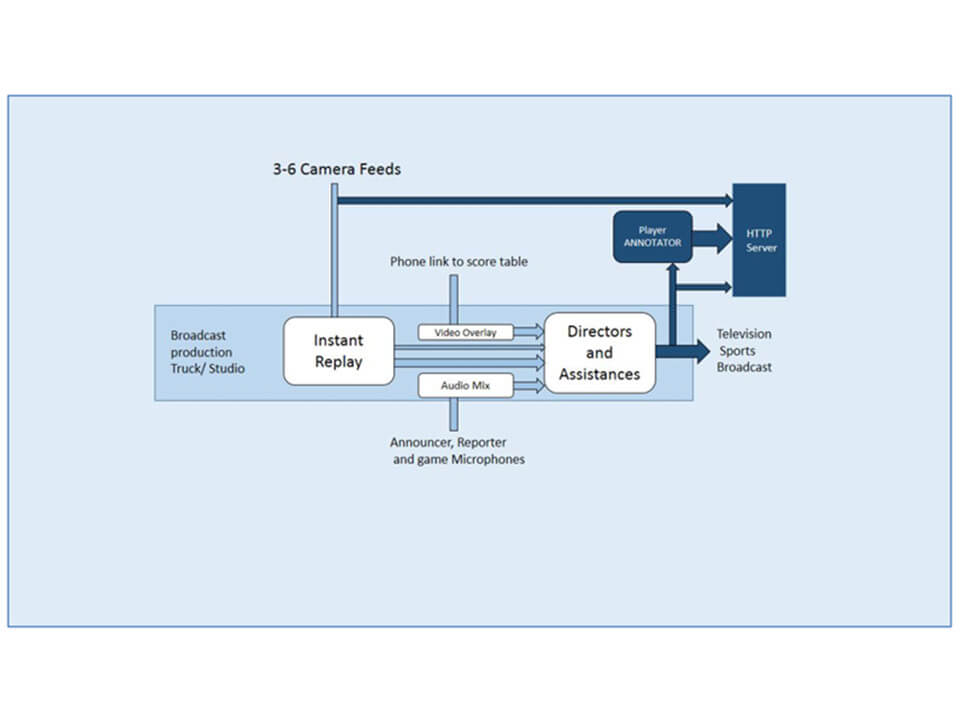

Enabling broadcasters to provide interactive sports experience

In this mode we will take all of the raw camera feeds and ingest them digitally onto an HTTP server. We do the same with the fully mixed broadcast feed that comes from the director’s station. In addition, the broadcast feed is passed into the annotator SW framework where one or two staff members add the necessary annotation information to create the game annotation file (GAF) which is also uploaded to the server.

The sport definition file contains information about the structure of the sport. This file need only be created once for each sport and serves to specialize the annotation and player software to that particular sport. The primary role of the annotator in the SW framework is to add time markings for the start and end of each navigation unit described in the sport definition file. All of the camera feeds, as well as the mixed broadcast feed, are time synchronized.
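Because the annotation consists of time markings and all feeds share one timeline, navigation between units reduces to a lookup in a sorted list of start times. The sketch below illustrates that idea; the function names, the `grace` behaviour for the "previous" control and the example timings are assumptions, and the actual layout of the game annotation file is not shown here.

```python
import bisect


def next_unit_start(unit_starts, position):
    """Start time of the first navigation unit that begins after position, or None."""
    i = bisect.bisect_right(unit_starts, position)
    return unit_starts[i] if i < len(unit_starts) else None


def previous_unit_start(unit_starts, position, grace=2.0):
    """Start of the current unit, or of the unit before it when the control is
    pressed within grace seconds of the current unit's start."""
    if not unit_starts:
        return None
    i = bisect.bisect_right(unit_starts, position - grace) - 1
    return unit_starts[max(i, 0)]


if __name__ == "__main__":
    # Start times, in seconds, of annotated plays (sorted ascending).
    starts = [0.0, 42.5, 90.0, 131.0]
    print(next_unit_start(starts, 50.0))
    print(previous_unit_start(starts, 95.0))
```

Since the camera feeds are time synchronized, the same returned timestamp can be used to seek whichever camera angle the fan currently has selected.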

It is clear that controls such as “next play” and “change camera” are of great interest to fans. It is also clear that fans can very rapidly learn interactive controls using on-screen templates of the controls. There do not seem to be any usability barriers to fan adoption of the technology.

The viewer controls that we can offer to the fans can be:

- standard play, pause, fast forward, rewind

- audio volume and mute

- next/previous play controls

- switching of camera angles

- access to game statistics

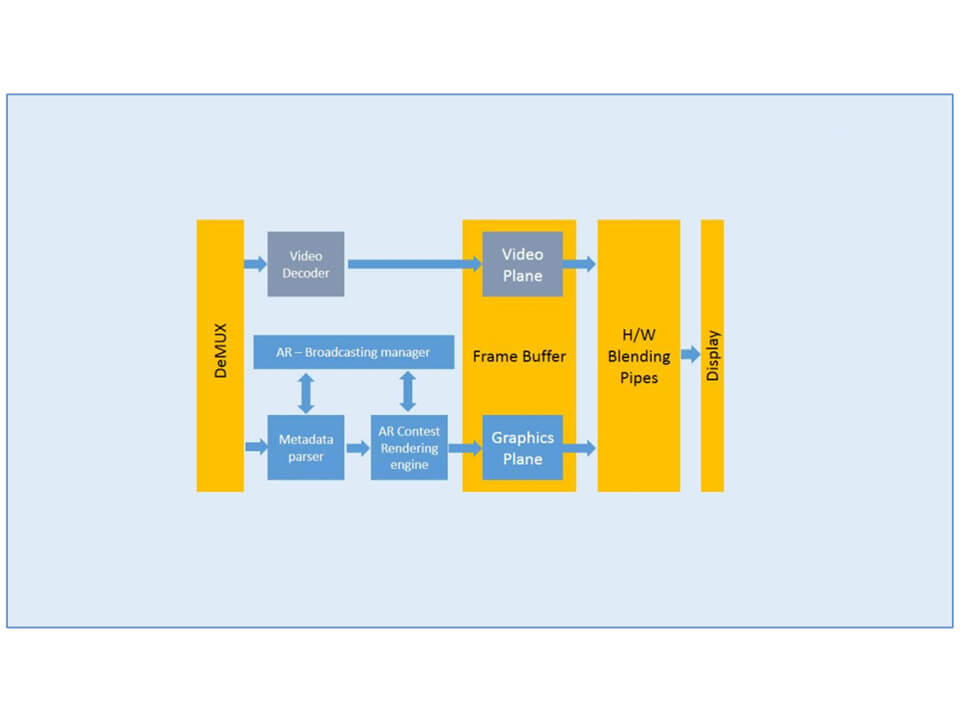

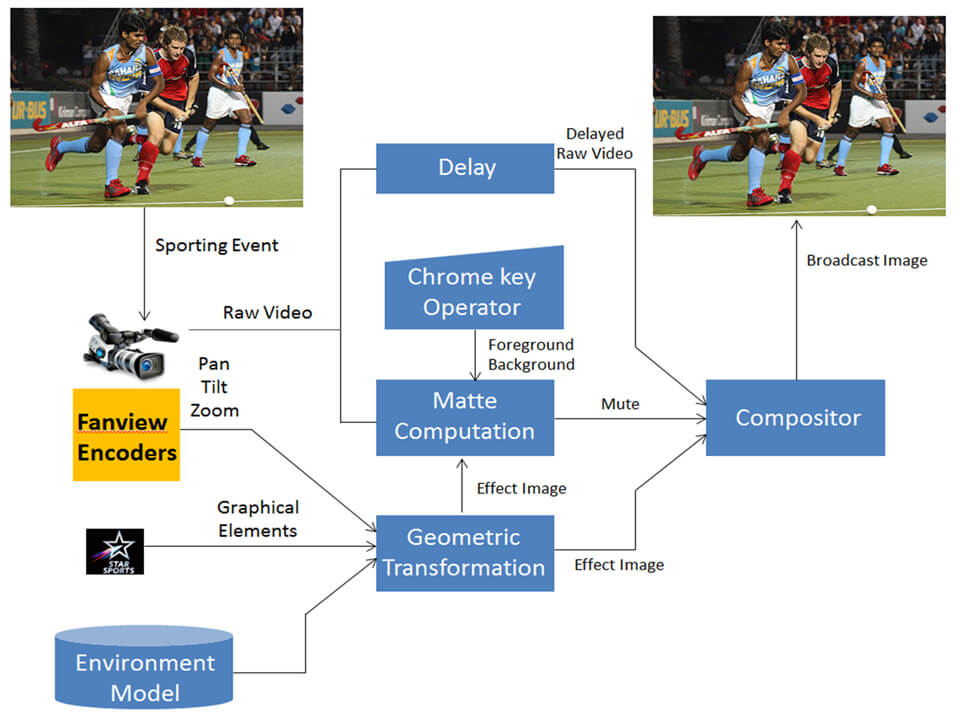

3D Broadcast viewing can be enabled through FanView TV STB

Shown below is the architecture of the TV STB for rendering augmented broadcasting content. The rendering engine is the main entity of the solution. It grabs the raw data files and renders the final content layout to be displayed on the TV. In the TV STB, video and graphics are handled on separate planes. Each plane is an area of graphics memory that serves as a render target in a display adapter and stores images in pixel formats ready to be displayed on a TV screen. The video plane stores the images decoded and rasterized by the video decoder, and the graphics plane stores the images rendered by the 2D/3D graphics-rendering engine.

These two types of images are blended into a single stream of images using hardware display pipelines.
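The blending step can be illustrated in software with the standard "over" compositing rule. On an STB this is done by the hardware display pipeline; the sketch below only shows the arithmetic, and it assumes 8-bit RGB video pixels and 8-bit RGBA graphics pixels with straight (non-premultiplied) alpha. The function names are illustrative.

```python
def blend_pixel(video_rgb, graphics_rgba):
    """Composite one graphics pixel over one video pixel."""
    r, g, b, a = graphics_rgba
    alpha = a / 255.0
    return tuple(
        round(alpha * graphics_channel + (1.0 - alpha) * video_channel)
        for graphics_channel, video_channel in zip((r, g, b), video_rgb)
    )


def blend_planes(video_plane, graphics_plane):
    """Blend two planes of equal size, each given as rows of pixel tuples."""
    return [
        [blend_pixel(v, g) for v, g in zip(video_row, graphics_row)]
        for video_row, graphics_row in zip(video_plane, graphics_plane)
    ]


if __name__ == "__main__":
    video = [[(100, 100, 100), (10, 20, 30)]]
    graphics = [[(200, 200, 200, 51), (255, 0, 0, 0)]]  # 20% opaque, then transparent
    print(blend_planes(video, graphics))
```

Where the graphics plane is fully transparent the video shows through unchanged, which is what allows augmented content to cover only part of the picture.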

Augmented content and video can be separated using a demultiplexer (de-MUX). The metadata is also parsed in order to download and graphically render the augmented content. According to the synchronization information included in the metadata transmitted from the multiplexing and transmitting server, each rendered image of augmented content should be immediately blended with its counterpart in the video frames.

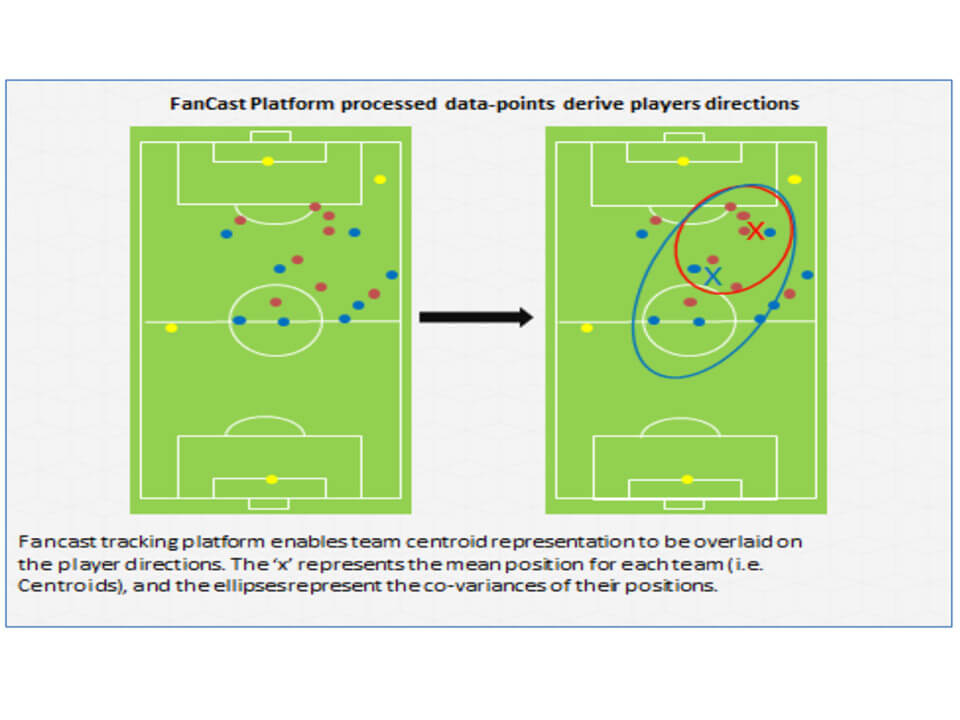

The FanView protocol stack framework, with rendering engine support, enables 3D broadcast views on the STB/TV. The STB/TV receiver contains an embedded MPEG-x (3D) player. In this way the viewer can interact with the replay, ultimately choosing different viewing angles. The positions of the players are tracked by means of image processing and the data is stored in XML format. Once the XML description of the position updates has been retrieved, the 3D content can be reconstructed automatically in real time to generate the 3D MPEG clips.

At the broadcast end, the carousel is merged with the live audio and video of the soccer match and broadcast. At the TV/STB end, the STB runs the protocol stack (with MPEG-4 replay capabilities), receives the broadcast, and loads and executes the Xlet. Once started, the Xlet begins “monitoring” for events (received through DVB) and allows the user to interact with it. The events and clips are generated via “live content production” facilities.

Shown below are some of the use cases of the proposed FanView 2D/3D graphics view of player tracking, showcased for hockey and soccer (reaction sports).

Epilogue

FanView combines a deep understanding of technology and societal forces to identify and evaluate discontinuities and innovations in the next 3 to 10 years. Our approach to technology forecasting for our products is unique: we put people at the center of our forecasts. Understanding humans as consumers, workers, householders, and community members allows FanView’s clients to look beyond technical feasibility to identify the value in new technologies, forecast adoption and diffusion patterns, and discover new market opportunities and threats.

Our product development strategy combines a deep understanding of the global technology economy, user behavior, media and emerging media technologies, product delivery systems, and societal forces to identify and evaluate emerging trends, discontinuities, and innovations in the next three to ten years. We use foresight to develop insights and strategic tools to better position ourselves in the marketplace.

In today’s broadcasters’ market place, the reality they face is “Accelerated transformation activities and improved focus on the multiplatform consumer are the keys to unlocking future value”.

The traditional broadcasting industry remains on its transformation journey – and the top level imperatives for broadcasters are much the same as they have been for the last couple of years: to transform into digital, connected B2C businesses. Those imperatives have implications right across organizations.

We at FanView see five key differentiators that every broadcaster should be building into the heart of its business and operating model. They are:

- Play to enhance your strengths

- Build new insight, analytics and audience measurement and monetization capabilities

- Innovate and take calculated risks

- Build new technologies

- Focus on the audience for increasing their salience

FanView is built for a first-rate fan and audience experience, which is more important than ever in developing the sophisticated broadcast businesses that investors will value in the future. Delivering a high-quality, simple, user-friendly, familiar and good-value product in order to capture, retain and monetize restless audiences across multiple access points should be the fundamental organizational focus for broadcasters. Editorial, production and commercial teams should build their strategies around consumers (and be incentivized to do so), and this thinking should become the force that tears down the walls separating these traditional business silos.

Broadcasters must not lose their heritage in getting powerful, linear content in front of audiences and forging deep emotional connections with those audiences. But the fundamental shift now required is to become true B2C businesses that evolve these audience relationships into direct consumer relationships.

Thus, FanView sits at the core of a broadcaster’s digital transformation.

Successful consumer businesses excel in transaction management, customer service, marketing, product development and supply chain management. With these attributes, broadcasters can start to lay claim to being sophisticated businesses and, by using FanView, find themselves on the right side of industry shareholder value.

The FanView POC platform enables viewers to watch multi-cam, multi-angle views of real-time sports events. It also enables copyright owners, broadcasters and tier-1/2/3 ad sponsors to monetize post-match archive video content on an on-demand, pay-per-view or pay-per-season basis.

- The proposed POC will demonstrate end-to-end streaming of synchronized multi-angle video from multiple cameras to remote viewers. The platform stitches the feeds and streams the video capsules to provide an immersive panoramic experience on Android TVs and Android tablets simultaneously. Technicians will view the streams from remote locations; in problematic scenarios a technician takes remote control of a camera and zooms (+/-), pans or tilts it, while other viewers should not see the zoomed view.

- To produce a 360° (panoramic) video, a scene is captured with multiple cameras that capture all possible viewing angles. These various views are then stitched together into a single high-resolution video sphere that is encoded and streamed using existing standards. On the receiving end, technicians will be able to choose and dynamically modify their field of view by either moving the display or touching the screen.

- Critical factor: the video feed streamed from the campus end should not suffer from glitches, latency or poor quality, since these would impact manual and automated monitoring. (Camera selection can be a mix and match of analogue and IP types.)

- Strip/thumbnail (mosaic) view: the user should be able to see views from different camera angles on the app screen at the same time while the normal angle continues to play.

- Easy toggling should enable the user to switch back and forth between different camera angles and the normal view.

- Viewers will be able to access any of the multiple viewing angles of interest. TV users should be able to use their remote control to zoom or pan across the entire field/campus to track a particular moving object across an open field and to track and trace a video-tagged object. The app should be able to switch to the camera views that are tracking specific objects for detailed action.